Malware uses AI to attack and spread

Today, at the 2022 symposium of the Norwegian AI Society, Lothar Fritsch, Aws Jaber Naser and Anis Yazidi presented their article "An overview of artificial intelligence used in malware". In this survey, they found that malware increasingly uses AI techniques to spread and attack more efficiently.

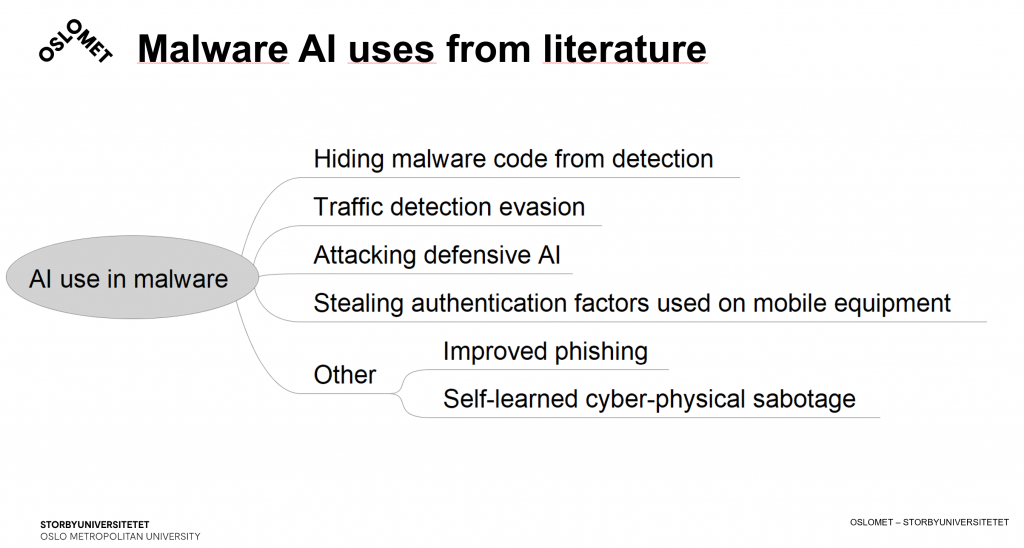

We found that AI has already been demonstrated in the following adversarial use cases:

- Direct sabotage of defending AI or ML algorithms;

- Detection evasion through intelligent code perturbation techniques;

- Detection evasion by learning traffic patterns when scanning systems, communicating, or connecting to command-and-control infrastructure;

- Black-box techniques that bypass intrusion detection using generative networks and unsupervised learning;

- Direct attacks that predict passwords and PIN codes;

- Automatic interpretation of user interfaces for application control;

- Self-learning system behavior for undetected automated cyber-physical sabotage;

- Botnet coordination with swarm intelligence, removing the need for command-and-control servers;

- Sandbox detection and evasion with neural networks;

- Hiding malware within images or neural networks.

We conclude that AI is an emerging risk in cybersecurity, as:

- AI deployment in malware is abundant in prototypes and demonstrators;

- AI is already used in some malware;

- AI offers high potential for automation and autonomy in malware, which may deprive information security defenders of defenses such as redirecting command-and-control servers;

- AI-enhanced malware is a serious emerging risk for information security.

Interesting findings and an interesting read. I have also come across malware that can solve CAPTCHAs to sneak past CAPTCHA-based authentication.