On the origin of quantum advantage, by Maksym Teslyk

Quantum computing seems to be a popular trend all over the word nowadays. Many of us have heard that private companies, e.g., D-Wave Systems, Google quantum AI, IBM with its very latest quantum processor Condor, etc., are working hard on the construction and design of quantum computation facilities. Even more on that, the academic community is also involved in the race – and OsloMet is no exception here.

One may wonder why are we working so hard and investing so much time and resources to build a quantum computer? Maybe all this activity is nothing more but a hype, and the focus on common and well-known classical machines, which we know how to produce, would be a better strategy? What are the benefits of calculations based on quantum algorithms, instead of classical ones?

One may suggest pure math to answer these questions. However, algorithm complexity theory is not of great help. There are plenty of issues which it cannot solve. For example, one may try the NP problem, which is believed to be hard enough to be included in the Millenium Prize Problems listing. And introducing quantum complexity classes just makes the approach even more challenging.

The opposite solution is solely practice. One may design a quantum device able to solve some (abstract) tasks which is unsolvable by any existing (or forthcoming) classical computer. The approach attracts much attention today, but cannot provide any satisfiable answer – see, e.g., the recent efficient simulation of quantum processors.

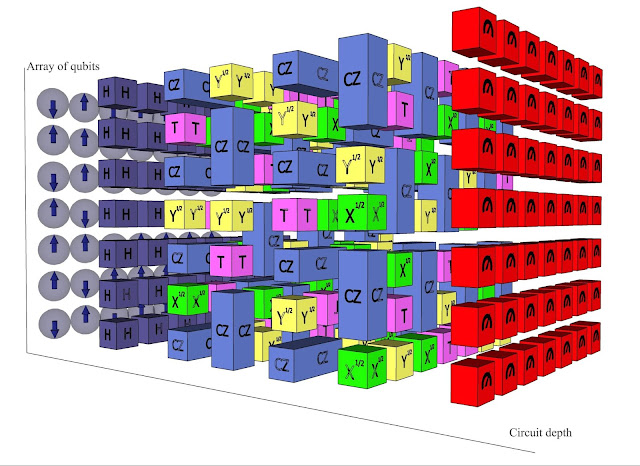

Ok, but we know that the properties of elementary pieces of information exhibit significant differences. For example, any classical bit can be represented as a switch between two mutually excluding classical states. Quantum bits, or qubits, are encoded with the Bloch sphere – they are two-dimensional, unlike bits. Such a seemingly strange and counter-intuitive representation originates from the quantum superposition principle, allowing for a quantum system to contain both mutually excluding outcomes simultaneously. Combined with linearity of unitary operators performing quantum evolution, this allows to hack the computation. Namely, one may encode all the possible combinations of the onset data into a single input state and obtain all the possible outputs in a single run of a quantum processor. Obviously, such a recipe won’t work for classical switches.

So, have we found the answer? On the one hand, both quantum superposition and linearity of operators allow one to use quantum interference at its full power. This can be easily illustrated with the help of, e.g., quantum Bernstein-Vazirani algorithm which outperforms the classical one. On the other hand, the Gottesmann-Knill theorem challenges this and makes the whole approach a bit unclear.

If the properties of information cannot explain the quantum advantage, maybe we should look for something which cannot be reproduced within any classical device at all? How about entanglement? Indeed, this phenomenon, blamed by A. Einstein as a ‘spooky action at a distance’, exhibits the possibility of correlations at the level which is unattainable in the terms of non-quantum physics. It is also known as quantum non-locality and violates Bell inequalities which any classical system, regardless its properties and working principles, should obey.

Entangled states imply the existence of a quantum communication channel and can be used to transfer data far more efficiently than any existing communication networks. These are not just words: we do have such quantum protocols as quantum teleportation or superdense coding, and both are heavily based on entanglement.

So, we see that quantum non-locality may be treated as a quantum resource allowing more efficient information transfer. Moreover, it was shown that if the entanglement which is produced by some quantum circuit is upper-bounded, then it can be efficiently simulated classically. Therefore, one may conclude that entanglement speeds up quantum computation. However, another study could not detect any significant role of this resource in the Shor’s algorithm. Taking into account that the algorithm is one of the most efficient among the ones known to date, we must admit that the role of entanglement in the speed-up requires further clarification.

We may consider the problem at the very abstract level also. Any process obeys some set of rules, which can be formalized in the terms of underlying logic. The formalism was developed almost a century ago. It clearly demonstrates that any classical system obeys the rules of Boolean logic, which can be inferred from the relations among the subsets of a phase space, and that any quantum system is governed by the relations among the linear subspaces of the Hilbert space (quantum logic). The key difference between these two systems originates from the commutation properties of classical functions and quantum operators, respectively.

The Huygens-Fresnel principle allows to compare both logics with such different algebraic structures. Namely, let us consider a wave propagating from point A to B. In the terms of wave optics we know that the transition should take into account all possible paths through space. However, in case the wavelength is negligible, all these additional paths vanish but the one governed by far more simple ray optics. The same procedure may be applied to quantum circuits in terms of the path integral formalism. Taking the de Broglie wavelength to the zeroth limit determines their transition to classical calculations. Unfortunately, the estimation of information lost under the limit does not reproduce the corresponding increase of computational inefficiency. To sum up, we know that quantum advantage exists. This can be easily illustrated with the help of Grover’s search algorithm, which outperforms any classical analog. On the other hand, the origin of the advantage looks fuzzy. And everyone is highly invited to participate in solving the puzzle.